WHY LEAK TEST?

Production leak testing is implemented to verify the integrity of a manufactured part. It can involve 100% testing or sample inspection. The goal of production leak testing is to prevent “leaky” parts from reaching the customer. Because manufacturing processes and materials are not “perfect,” leak testing is often implemented as a final inspection step. The consequences of a “leaky” part getting into the hands of a customer can vary greatly.

Here are a few examples:

• Inconvenience or Nuisance (a disposable beverage cup leaks)

• Loss of Product (leaky 55 gallon drum)

• Product Failure (waterproof wristwatch fails)

• System Failure (a leak in condenser coil causes a refrigeration system to fail)

• Personnel Hazard or Loss of Life (an automobile airbag fails to deploy in an accident)

The “cost” of the consequence often determines whether the product is leak tested, how the test is implemented, and even which test method is used. For example, an automotive airbag inflator is 100% leak tested using very sensitive and reliable methods, with a large safety factor in the leak rate limit, and is sometimes even tested redundantly. On the other hand, a paper beverage cup is not 100% leak tested due to its low cost, simple manufacturing process (producing very few leaky cups), and low “cost” (or consequence) of a leaky cup.

In some cases leak testing is mandated by a regulation or industry specification. For example, in order to reduce hydrocarbon emissions from automobiles, auto makers are now designing and leak testing fuel components to tighter specifications required by the EPA. Also, the nuclear industry enforces regulations and leak test specifications on components such as valves used in nuclear facilities. Whether mandated by regulation or implemented to ensure product function and customer satisfaction, leak testing is commonly performed on manufactured parts in many industries including automotive, medical, packaging, appliance, electrical, aerospace, and other general industries.

WHAT SIZE LEAK IS A PROBLEM?

One of the greatest challenges in production leak testing is correlating an unacceptable leak in a part used by the customer (in the field) with a leak test on a production line. For example, the design specification of a water pump may require that no water leaks externally from the pump under specified pressure conditions. However, in production it may be desirable to leak test the part with air. It is intuitive to assume that air will leak more readily through a defect than water. One cannot simply state “no leakage” or even “no leakage using an air pressure decay test”. This would result in an unreasonably tight test specification, an expensive test process, and potential scrap of parts that may perform acceptably in the field. Therefore, one must set a limit using an air leak test method that correlates to a water leak. Establishing the proper leak rate reject limit is critical to ensure part performance and to minimize unnecessary scrap in the manufacturing process.

Determining the leak rate specification for a test part can be a significant challenge. Having a clear and detailed understanding of the part and its application is necessary in order to establish the leak rate specification. Even then, many specifications are estimates and often require the use of safety factors. First, one must evaluate the effect of a leak on the part and thoroughly understand and identify potential leak paths.

The following are examples of how a leak may affect the performance of a part or system.

A. The depletion of a pressurized gas in a sealed reservoir

1. The shelf life of a propane gas cylinder

2. The operating life of a pressurized gas air-bag inflator

3. The loss of electrical insulating gas in a high voltage switch

4. The loss of refrigerant in a refrigeration system

B. The out-leakage of a pressurized gas or in-leakage of air in a process system

1. A valve or fitting in a high purity gas manifold

2. A valve, fitting, or regulator in a hydrogen fuel cell

3. Vacuum components of a vacuum process system (valves, fittings, gauges, chamber)

C. The out-leakage of liquid from a liquid handling device

1. Medical devices handling blood or other solutions

2. Automobile fuel handling components (fuel tank, hose, etc.)

3. Water pump, radiator, etc.

4. Product loss from a storage container such as an ink jet printer cartridge

D. The in-leakage of a liquid into a device

1. Pacemaker and other medical implants

2. Submersible instrumentation and devices

E. The in-leakage of gas (air) or humidity into a device

1. Electronic enclosure

2. Food and pharmaceutical packaging

F. The leakage of a gas or liquid across an internal seal

1. Multi-stage gas regulator

2. Valve manifold

Second, with a thorough understanding of the part, the leak rate specification can be developed using one or more of the following methods:

a. Theoretical calculations of part performance under the leak limit conditions,

b. Empirical testing (functional, reliability and/or life) of the part under known leaking conditions,

c. Examining the leak rate specifications of similar parts,

d. Testing known failures or field returns,

e. Running laboratory tests on parts with known leaks installed into them,

f. Examining established industry or regulatory specifications.

The following is a simplified calculation based on an understanding of an air bag inflator’s performance and required life span. A gas pressurized air bag inflator cylinder may have a life of 15 years (4.73 x 10^8 seconds). The initial fill pressure of the inflator is 2,000 psig. The design of the inflator allows for the pressure to be as low as 1,800 psig and still function properly (a pressure loss of 200 psi or 13.6 atm). The gas volume is 50 cc. The amount of gas lost over the 15 year period is 13.6 atm x 50 cc = 680 atmcc. Assuming a constant leak rate over time and a constant temperature, the maximum allowable leak rate is 680 atmcc / 4.73 x 10^8 sec = 1.4 x 10^-6 atmcc/sec. If 100% of the gas inside the inflator is helium, then a hard vacuum helium test at a limit of 1.4 x 10^-6 atmcc/sec would be a good choice. However, if only 10% of the gas is helium, then the reject limit would need to be set at 1.4 x 10^-7 atmcc/sec.

Using one of the above methods, one may arrive at a leak rate reject limit that is not practical or cost effective to implement. For example, a leak rate specification of 1 x 10^-10 atmcc/sec helium may be achievable under laboratory conditions with a helium leak detector but is not a practical limit for production. In these cases, one must look at the big picture and be willing to make trade-offs in part design and performance while considering the limits of leak test technology.

Another point worth mentioning is that the part itself does not know the leak rate limit that has been assigned to it. In fact, the design of the part seals (welds, o-rings, glue joints, etc.) often does not take into account the leak rate limit applied to the part. In other words, when the part fails it is unlikely to fail at or near the limit; it often fails at some value much larger than the limit. So even though the test method has the sensitivity to meet the reject limit specification, it often spends most of its life rejecting parts with leaks much larger than the specification.

Once a leak rate limit or specification has been established, it is critical that this information is expressed correctly to ensure the proper test is implemented. Simply stating “leak test to 1.0 x 10^-7 atmcc/sec” does not provide sufficient information. A more specific description would be: “Helium leak test using outboard (out-leakage) vacuum test method with the part pressurized to 90 psia with 100% helium. The leak rate shall not exceed 1.0 x 10^-7 atmcc/sec He.” This description specifies the test method, flow direction, helium gas pressure (and concentration), and leak rate limit. It may also be appropriate to specify a “time in test”. This requires the operator or test system to monitor the test part over a minimum test time. Another appropriate description might list the leak rate limit with a test method, such as ASTM E498 Method A (or some other method).
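Returning to the air bag inflator example earlier in this section, the arithmetic can be reproduced in a few lines. The Python sketch below is illustrative only. The function name and parameters are hypothetical, and it carries the same assumptions as the text: a constant leak rate, a constant temperature, and a helium reject limit that scales directly with the helium fraction of the fill gas.

```python
SECONDS_PER_YEAR = 365.25 * 24 * 3600  # assumption: average year length
PSI_PER_ATM = 14.696                   # one standard atmosphere in psi


def max_allowable_leak_rate(pressure_loss_psi, volume_cc, life_years,
                            helium_fraction=1.0):
    """Return the helium reject limit in atm cc/sec.

    Assumes a constant leak rate and constant temperature, as in the text.
    helium_fraction is the portion of the fill gas that is helium (0 to 1).
    """
    gas_loss_atmcc = (pressure_loss_psi / PSI_PER_ATM) * volume_cc
    total_leak_rate = gas_loss_atmcc / (life_years * SECONDS_PER_YEAR)
    # Only the helium portion of the leaking gas is seen by the detector.
    return total_leak_rate * helium_fraction


# Values from the inflator example: 200 psi loss, 50 cc, 15 years.
limit_pure = max_allowable_leak_rate(200, 50, 15)
limit_dilute = max_allowable_leak_rate(200, 50, 15, helium_fraction=0.10)
print(f"100% helium fill: {limit_pure:.1e} atm cc/sec")
print(f"10% helium fill:  {limit_dilute:.1e} atm cc/sec")
```

A real specification would normally apply a safety factor on top of this result, which the sketch does not attempt to choose.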

Another common way to express a leak rate limit is in mass loss per year. A specification in the refrigeration industry might be “leak rate shall not exceed 0.1 oz. per year R134a.” Although this expresses the maximum loss of gas per year, it does not specify the test method. One typically assumes that in order to meet this requirement the production leak test must be under “operating conditions”. If, for example, a helium test method is used, then several calculations may be required to convert the mass loss of refrigerant to helium mass loss, then to a volumetric leak rate commonly used with helium leak detectors. It then may be desirable to dilute the helium to reduce its usage. A test pressure must also be established. Finally, the actual helium test method must be selected.
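As a rough illustration of the first of these calculations, the sketch below converts a refrigerant mass loss per year into a volumetric leak rate of the same gas. It is a minimal example under stated assumptions, not a complete conversion: the gas is treated as ideal, the standard condition is taken as 0 °C and 1 atm (22,414 cc per mole), and the function name is hypothetical. The further step of converting a refrigerant leak rate to an equivalent helium leak rate depends on the flow regime through the leak and on the test pressure, and is deliberately left out.

```python
GRAMS_PER_OUNCE = 28.3495
MOLAR_VOLUME_CC = 22414.0              # assumption: ideal gas at 0 C and 1 atm
SECONDS_PER_YEAR = 365.25 * 24 * 3600  # assumption: average year length
R134A_MOLAR_MASS = 102.03              # g/mol


def mass_loss_to_leak_rate(oz_per_year, molar_mass_g_per_mol):
    """Convert a mass loss in oz/year to a leak rate in atm cc/sec of that gas."""
    moles_per_year = oz_per_year * GRAMS_PER_OUNCE / molar_mass_g_per_mol
    atmcc_per_year = moles_per_year * MOLAR_VOLUME_CC
    return atmcc_per_year / SECONDS_PER_YEAR


r134a_rate = mass_loss_to_leak_rate(0.1, R134A_MOLAR_MASS)
print(f"0.1 oz/year R134a = {r134a_rate:.2e} atm cc/sec of R134a")
```

Under these assumptions, 0.1 oz per year of R134a corresponds to roughly 2 x 10^-5 atm cc/sec of refrigerant.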

Lastly, the automotive industry has implemented a leak rate specification for fuel handling components that specifies a maximum allowable theoretical leak diameter. The advantage of this way of expressing the leak rate limit is that it gives the part manufacturer significant leeway in designing the appropriate leak test. The challenge, however, is correlating the theoretical leak diameter to an actual leak rate. Users of these specifications must understand the theoretical relationships between leak hole geometry and gas flow and all users must implement these relationships consistently. A second option is to set the leak rate limit of the specific test using a leak orifice or channel that has been manufactured and dimensionally calibrated to the geometry (diameter and path length) required by the specification.
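To give a sense of what such a relationship can look like, the sketch below uses one common textbook model: laminar, compressible (Poiseuille) flow of gas through a straight round capillary. This is an assumption chosen for illustration and is not taken from any particular automotive specification. Real specifications may prescribe a different model, and real leak paths are rarely ideal capillaries. The function name, the 20 micron diameter, and the 3 mm path length are hypothetical.

```python
import math

PA_PER_ATM = 101325.0
PA_M3_TO_ATMCC = 1e6 / PA_PER_ATM  # 1 Pa*m^3 expressed in atm cc
AIR_VISCOSITY_PA_S = 1.81e-5       # approximate value for air near room temperature


def capillary_leak_rate(diameter_m, length_m, p_in_pa, p_out_pa, viscosity_pa_s):
    """Leak rate in atm cc/sec for laminar compressible flow in a round capillary.

    Uses Q = pi * d^4 * (P_in^2 - P_out^2) / (256 * viscosity * L), with
    absolute pressures in Pa. Valid only while the flow stays laminar.
    """
    q_pa_m3_per_s = (math.pi * diameter_m ** 4 * (p_in_pa ** 2 - p_out_pa ** 2)
                     / (256.0 * viscosity_pa_s * length_m))
    return q_pa_m3_per_s * PA_M3_TO_ATMCC


# Hypothetical channel: 20 micron diameter, 3 mm long, 2 atm absolute to 1 atm.
rate = capillary_leak_rate(20e-6, 3e-3, 2 * PA_PER_ATM, PA_PER_ATM,
                           AIR_VISCOSITY_PA_S)
print(f"Estimated air leak rate: {rate:.3f} atm cc/sec")
```

In this model the leak rate scales with the fourth power of the diameter, so a small disagreement about the hole size produces a large disagreement about the leak rate. That is one reason all users of a diameter-based specification need to apply the same relationship.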

HOW SHOULD I LEAK TEST?

Once a leak rate limit or specification has been determined, one can take a closer look at the available test methods to implement. However, the leak rate limit is not the only criterion that might affect the test method chosen.

Here are some other considerations:

• What additional product cost (including production time) due to the leak test step can be tolerated?

• Based on an understanding of part design and manufacturing processes, what are the potential causes and locations of leaks?

• Will leaky parts be scrapped or reworked?

• What cycle time for the leak test is acceptable and how will the leak test process fit in the production line?

• Will the parts be 100% tested, batch tested, or tested individually?

• Is there an advantage to implementing subcomponent leak tests earlier in the product assembly?

• What design and manufacturing limitations (pressure limits, part cleanliness, part temperature, part rigidity) might affect the type of test method we use?

• Do I need a quantitative measurement of part leak rate?

• Do I need to know the location of a leak?

• Is it practical to leak test the part under conditions it will see in actual use?

Each leak test method has various characteristics, advantages and disadvantages. These methods can be categorized several ways:

• Does the method provide a measurement of the leak rate or only a visual indication?

• Does the method identify the location of the leak(s)?

• Does the method perform a global test on the part (considering the sum total of all leaks), or does it simply measure “one” leak at a time?

• Does the method require the operator to make a judgment call based on a visual indication or is there a Go / No Go determination by the system?

• What is the sensitivity of the method (how small of a leak can it detect)?

• How fast is the method and is the test speed dependent on part size?

• How is the capability of the test method affected by factors such as part temperature, part cleanliness, part materials of construction, ambient humidity, and ambient trace gas concentrations?

• Does the method use air or trace gases?

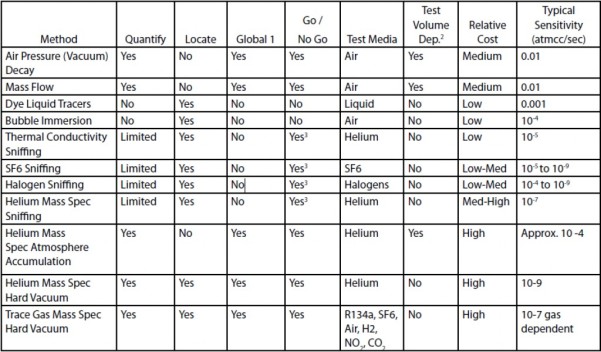

The following table lists common production leak test methods with these associated characteristics.

1. The test method measures the global leak rate of the part, versus individual leaks.

2. The test volume significantly affects the test cycle time and/or sensitivity.

3. Result may be operator dependent.

AIR LEAK TESTING METHODS

A. Pressure (Vacuum) Decay Testing

Pressure decay leak testing relies on the principle that a leaky part pressurized with air, then isolated with a valve, will show a loss of total air pressure over time (see Figure 1 below). The pressure decay rate, sometimes expressed in psi/second, can be converted to a leak rate according to the following equation:

Equation 1:

Q = (P1-P2)V/t

where Q = the leak rate, usually expressed in stdcc/min.

P1-P2 = the change in pressure over the test time

V = test volume

t = test time

Temperature is assumed to be held constant
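Equation 1 is simple enough to express directly in code. The Python sketch below is illustrative: the function names and example values are hypothetical, pressures are in atm, volume in cc, and time in seconds, so the result is in atm cc/sec. The second function rearranges Equation 1 to estimate how long a test must run before a given leak produces a pressure drop the instrument can resolve.

```python
def pressure_decay_leak_rate(p1_atm, p2_atm, volume_cc, test_time_s):
    """Equation 1: Q = (P1 - P2) * V / t, in atm cc/sec (constant temperature)."""
    return (p1_atm - p2_atm) * volume_cc / test_time_s


def required_test_time(leak_rate_atmcc_s, volume_cc, min_pressure_drop_atm):
    """Equation 1 solved for t: time needed to see a resolvable pressure drop."""
    return min_pressure_drop_atm * volume_cc / leak_rate_atmcc_s


# Hypothetical test: 500 cc volume drops from 2.000 to 1.998 atm in 10 seconds.
q = pressure_decay_leak_rate(2.000, 1.998, 500.0, 10.0)
print(f"Leak rate: {q:.3f} atm cc/sec ({q * 60:.1f} atm cc/min)")

# Hypothetical limit: 0.01 atm cc/sec in a 5,000 cc volume, 0.001 atm resolution.
t = required_test_time(0.01, 5000.0, 0.001)
print(f"Required test time: {t:.0f} seconds")
```

The second example shows how a large test volume combined with a tight leak rate limit leads to long test times.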

However, it is not common for the test volume to be known. To calibrate the system, a known master leak standard or calibrated leak (typically calibrated at the reject value) is inserted into the test circuit or into an actual part. When the test cycle is run with this standard installed, the system is calibrated. The simulated leak causes a pressure drop that is equivalent to the reject level.

There are several variations of pressure decay testing. Commonly, an “open” style part is pressurized and the leakage is measured with the flow inside-out. If the part is sealed, one can perform a chamber pressure decay test where the sealed part is placed in an appropriate test chamber. The test chamber is pressurized and leakage is measured outside-in. This technique can also be used with vacuum (measuring leakage inside-out). Where high test pressures or large part volumes are involved, a differential pressure decay test can be employed. In this case a reference volume (or a known good part) that is equivalent to the part is pressurized at the same time as the test part. The system then looks at the pressure loss of the known good part compared to the part under test.

Pressure decay leak testing is a very common, relatively simple and low cost technique. However, for large test volumes and/or tight leak rates (less than 0.01 atmcc/sec) the test time can become excessive. Even with the best pressure transducers and high resolution A/D converters, several factors can make it a difficult test. Test results can be subject to factors including environmental and part characteristics. Any factor that will affect the test volume or pressure will affect the measured leak rate.

Some of these are:

• A part or test tooling that flexes and is not mechanically stable (e.g. a balloon or other flexible plastic part)

• A part that is hotter or colder than ambient temperature (e.g. resulting from a welding operation)

• Parts that have inconsistent part-to-part volume
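The temperature effect in particular is easy to underestimate. The sketch below estimates the apparent leak rate produced by a small temperature change alone. It is a minimal illustration that assumes an ideal gas in a rigid, leak-free volume (so absolute pressure is proportional to absolute temperature) and then applies Equation 1 to the resulting pressure change. The function names and example values are hypothetical.

```python
def thermal_pressure_change(pressure_atm_abs, temp_change_k, start_temp_k):
    """Pressure change of a fixed gas volume when its temperature changes.

    Assumes an ideal gas in a rigid volume, so P / T stays constant.
    Pressure is absolute; temperatures are in kelvin.
    """
    return pressure_atm_abs * temp_change_k / start_temp_k


def apparent_leak_rate(pressure_change_atm, volume_cc, test_time_s):
    """Equation 1 applied to a pressure change that is not caused by a leak."""
    return abs(pressure_change_atm) * volume_cc / test_time_s


# Hypothetical case: a 500 cc part at 2 atm absolute cools by 0.5 K
# (from 300 K) during a 10 second test.
dp = thermal_pressure_change(2.0, -0.5, 300.0)
false_leak = apparent_leak_rate(dp, 500.0, 10.0)
print(f"Pressure change: {dp:.5f} atm")
print(f"Apparent leak rate: {false_leak:.3f} atm cc/sec")
```

In this example a half-degree temperature change in a leak-free part looks like a leak of about 0.17 atm cc/sec, well above the 0.01 atmcc/sec level at which pressure decay test times already become long.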

B. Mass Flow Leak Testing

In mass flow leak testing the part is pressurized as in pressure decay leak testing. However, the leakage or gas flow is measured directly using a flow sensor, commonly a mass flow meter. The air supply is not isolated from the test part, thus the flow out of the part (the leak) is equal to the flow into the part as measured by the flow sensor. This direct method does not require a decay time; however, a stabilization time is often required. This method is most commonly used for large leak rates (1 sccm or 0.02 stdcc/sec and larger). There are several variations similar to those of the pressure decay method. This method is subject to the same factors that can affect pressure decay testing.

C. Bubble Immersion Testing

The simplest of the test methods, bubble immersion leak testing is typically the lowest cost as well. In simple terms, the part under test is pressurized with air while being submerged in a liquid, typically water. The operator looks for a stream of bubbles indicative of a leak. Because this method requires operator involvement and is subject to operator error, and because of the inconvenience of dealing with wet parts, this technique is commonly replaced with other test methods. The sensitivity of this method can be better than that of pressure decay (10^-4 atmcc/sec), and the method can even be faster for large parts. There are several variations of this method, including using liquids other than water, using vacuum to reduce the water pressure on the part, and manually applying a liquid solution to the part instead of dunking it.

TRACER GAS LEAK TESTING METHODS

A. Manual Sniffing

Manual sniffing to detect gas leaking out of a test part on a production line is a common and relatively low cost test method. The sensitivity of this method varies greatly depending on the tracer gas used and the type of instrument. The greatest disadvantage of this test method is that the results are often operator dependent. The operator must be skilled and patient in scanning the part for leaks, in order not to overlook a leak. The value of the leak as measured by the instrument depends on how close the operator places the probe to the leak and how fast the probe is moved across the part. For this reason it can be difficult to get repeatable, quantifiable results. This method should not be used as a final qualification leak test, but can be used as a pre-screen in combination with other test methods.

When using this technique it is recommended that the operator have a gas leak standard calibrated to the reject value of interest. Periodically, the operator can use this standard to verify or calibrate the sniffer instrument. When this method is employed, great care should be taken to ensure that excess amounts of the tracer gas are not in the ambient air as a result of large leaks or carelessly venting gas from the part into the room. High tracer gas backgrounds can make it difficult and sometimes impossible to locate a leak.

Helium is the most common tracer gas used for sniffing. However, hydrogen mixed with nitrogen is also used. In the refrigeration and other industries, the process gas is often used as the tracer gas. Thus it is common to find sniffer instruments designed to detect refrigerants and also SF6. When selecting a sniffer instrument, in addition to sensitivity to the gas of choice, one should also consider the selectivity of the instrument. The selectivity represents the instrument’s ability to select and identify the tracer gas without interference from other gases.

A helium mass spectrometer instrument is a good example of a highly selective instrument. Because it filters the mass of the gas molecules, virtually no other gases interfere with the signal. On the other hand, a thermal conductivity instrument measures the presence of a tracer gas by comparing its thermal conductivity to that of the ambient air. Helium and hydrogen have very similar thermal properties and, thus, the instrument cannot distinguish between the two gases. In addition, the presence of humidity or other vapors can interfere with the instrument and either give false signals or desensitized readings. Other gas sensing technologies may have similar interference issues with some gases and vapors. It is important to understand the limitations of the various instruments in regards to gas selectivity.

B. Helium Atmospheric Accumulation

Helium atmospheric accumulation, like sniffing, measures a leak flowing from a pressurized part into atmospheric pressure. However, unlike sniffing, this technique uses a chamber or enclosure surrounding the test part to accumulate and measure the total of all leaks emanating from the test part. (See Figure 2 below.) A significant advantage of this technique is that, when properly implemented, it can produce calibrated and repeatable results. Like pressure decay techniques, this method monitors the increase in gas pressure and thus is volume dependent. However, the pressure measurement is not the total gas pressure, but the partial pressure of the tracer gas. Measuring the partial pressure or concentration at very low levels (ppm level) makes this technique more sensitive than pressure decay. Another significant advantage of this technique compared to pressure decay is that it is much less sensitive to temperature and volume effects.

Using Equation 1 one can estimate the sensitivity of a particular test, where the volume is the dead volume of the accumulation chamber, the time is the test time or actual accumulation time, and the pressure rise is a measurement of the increase in tracer gas concentration (partial pressure). The resolvable pressure or concentration rise depends on the type of instrument used. A mass spectrometer helium leak detector has a typical ultimate sensitivity of 0.1 ppm (1 x 10^-7 atm). However, in practical applications using the accumulation technique one should not try to resolve changes smaller than 1 ppm (1 x 10^-6 atm). For example, a test with a 10 second accumulation time using a 1 liter dead volume chamber would result in Q = 1 x 10^-6 atm x 1,000 cc / 10 seconds = 1 x 10^-4 atmcc/sec. Total test time, however, can be as long as 20 seconds when accounting for part fill, stabilization, part vent, and chamber purge times.

The key to ensuring this technique works properly is to have the helium in the accumulation chamber well mixed so that the sample taken is representative and independent of the location of the leak(s). In principle, this technique can also be implemented with other sniffing instruments and tracer gases. Calibration of this technique is accomplished by introducing a leak (at the reject limit) into the accumulation chamber during a test on a known good part. The resulting signal represents the leak rate reject limit.
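The sensitivity estimate above can be sketched in code as follows. This is an illustration only: the function names are hypothetical, and converting ppm directly to a partial pressure in atm assumes the accumulation chamber is at 1 atm total pressure and that the helium is well mixed.

```python
def accumulation_sensitivity(min_rise_ppm, dead_volume_cc, accumulation_time_s):
    """Smallest detectable leak rate in atm cc/sec, from Equation 1.

    Assumes the chamber is at 1 atm, so 1 ppm of helium is 1e-6 atm of
    partial pressure, and that the gas in the chamber is well mixed.
    """
    partial_pressure_rise_atm = min_rise_ppm * 1e-6
    return partial_pressure_rise_atm * dead_volume_cc / accumulation_time_s


def expected_rise_ppm(leak_rate_atmcc_s, dead_volume_cc, accumulation_time_s):
    """Helium concentration rise in ppm produced by a given leak rate."""
    return leak_rate_atmcc_s * accumulation_time_s / dead_volume_cc * 1e6


# Example from the text: 1 ppm resolution, 1 liter dead volume, 10 seconds.
q_min = accumulation_sensitivity(1.0, 1000.0, 10.0)
print(f"Sensitivity: {q_min:.1e} atm cc/sec")

# Halving the dead volume halves the smallest detectable leak rate.
print(f"With 500 cc: {accumulation_sensitivity(1.0, 500.0, 10.0):.1e} atm cc/sec")
```

The second function is the same relationship read in the other direction. It can be used to estimate the signal a reject-level calibrated leak should produce during calibration.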

C. Helium Hard Vacuum

This technique is the most common of the tracer gas techniques used in production leak testing. It uses a helium mass spectrometer (HMS) leak detector as the sensor. The mass spectrometer operates at vacuum, and it is convenient for this and other reasons to conduct the test under vacuum. See Figure 3 for typical configurations. The hard vacuum test can be implemented with either sealed or “open style” test parts. Sealed parts are typically sealed with some trace amount of helium for purposes of leak testing. In some cases, small sealed parts such as electronic devices are sealed with ambient air, then at a later point bombed with helium to introduce helium trace gas into the part (if it leaks) for subsequent leak testing. In the case of a sealed part, the part is placed in a vacuum chamber or fixture and the gas flow is inside-out (out-leakage). In the case of an “open style” part (a part that has not been completely sealed), helium is introduced to the part at leak testing. The part can be pressurized with helium during the test while a vacuum chamber surrounds the part (inside-out flow, or out-leakage). Alternately, the test part may be evacuated and the part surrounded with helium (outside-in flow, or in-leakage). The choice of which direction to measure the leakage depends on the function or use of the part, its capability to withstand the pressures created during the leak test, as well as other factors. As a rule of thumb, when possible, leak test the part as close as practical to the actual conditions it will see in use. For example, a gas regulator may be leak tested outside-in or inside-out. However, the best method would be to pressurize it to its design pressure, place it in a vacuum chamber, and perform the leak test inside-out.

Successfully implementing hard vacuum helium leak testing in a production environment requires attention to several system design elements, including:

• Size and design of the vacuum chamber and/or tooling

• Configuration of the HMS leak detector

• Implementation of roughing vacuum pumps

• Implementation of calibrated leak standards for system calibration

• Proper management of helium to prevent excessive helium in ambient air and minimize excessive helium from reaching the leak detector

• Optimization of the vacuum circuit

• Protection of the system components from contaminants

When properly designed, hard vacuum helium leak testing can be the most sensitive, fastest, and the most reliable production leak test method available.

CONCLUSION

Proper selection and implementation of a production leak test method starts with an understanding of WHY the test is being performed, followed by establishing what the leak rate limit is, and finally a determination of how the leak test will be performed. A careful and thoughtful evaluation at each of these steps, combined with the selection of high quality leak test hardware, will result in a cost effective, high performance, and reliable production leak test.